
What is Deepfake Technology and How Are Threat Actors Using It?

According to a 2022 survey, detected deepfake use rose by 13 percent between 2021 and 2022. Deepfakes can be incredibly destructive when leveraged by Advanced Persistent Threats (APTs). Here’s what you need to know about this emerging technology:

What are deepfakes?

Deepfake technology is a form of artificial intelligence that uses machine learning algorithms to generate realistic synthetic media, including images, video, audio, and text.

Types of deepfake technology

As explained by the Department of Homeland Security (DHS), deepfake technology uses a machine learning technique called a generative adversarial network (GAN) to fabricate deceptive images and videos.

The most common application of this technology is face swapping, which overlays one individual’s face onto another person’s body. Its misuse has raised concerns, including the spread of false information, the production of nonconsensual pornography, and the circumvention of facial recognition systems. Although deepfakes are mostly associated with images and videos, the technology can also manipulate audio and text.

Here are the types of deepfakes and potential malicious uses:

Deepfake videos and images

Deepfake videos and images alter or fabricate content so that it depicts behavior or information that differs from the original source material. These manipulations range from harmless pranks, like replacing a colleague’s picture with a celebrity’s face, to more malicious applications.

By abusing video or image manipulation technology, threat actors can target individuals with smear campaigns or spread disinformation by circulating videos or images of events that never occurred. Synthetic videos can also deceive biometric systems, giving attackers new avenues to exploit.


Deepfake audio

Deepfake audio poses an increasing threat to voice-based authentication systems. Creating a convincing deepfake voice requires sample recordings of the target, so individuals with many publicly available voice samples, such as celebrities and politicians, are particularly vulnerable to this tactic.

Threat actors can obtain voice samples from sources such as social media, television, and other recordings, and then manipulate them to speak scripted content. At present, creating deepfake audio is too time-consuming for real-time attacks. However, organizations should monitor how deepfake audio technologies evolve, because they continue to advance and could be combined with deepfake videos. Audio deepfakes have already been used in several cybercrimes.

Textual deepfakes

Textual deepfakes are written content that appears to be authored by a real person. Social media is a primary driver of their growing use: fabricated posts and bot-generated replies can form part of a social media manipulation campaign. The goal is often to spread fake news and disinformation at scale, creating the false impression that many people across different platforms share the same belief.

Which type of deepfake is the hardest to detect?

Textual deepfakes can be harder to detect than other forms of deepfakes. Visual and audio manipulations often show noticeable signs of AI generation, such as visual inconsistencies or artificial-sounding voices.

Textual deepfakes, by contrast, rarely offer clear indicators of authenticity. Because online users often ignore grammar and punctuation, errors and an informal style are not reliable signs that a post is fake. Furthermore, widely available tools such as ChatGPT help threat actors overcome language barriers and craft convincing phishing scripts.

The rise of deepfake technology

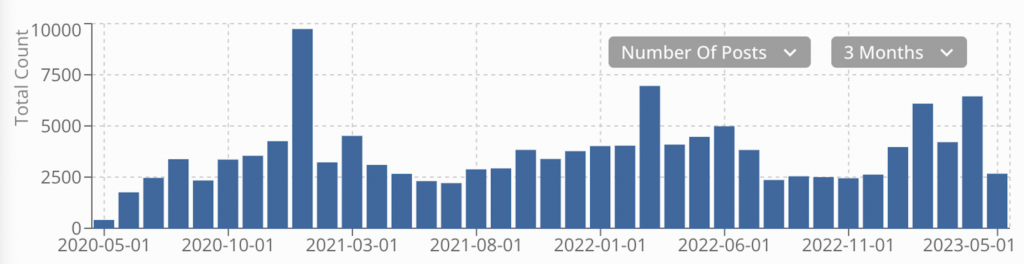

According to our collections, threat actors posted about deepfakes on illicit forums and marketplaces approximately 133,000 times in the past three years. Although not all of these discussions are malicious, they show that threat actors are actively discussing and learning about the technology.

How cyberthreat actors can leverage deepfakes

According to a DHS report, the first reported malicious use of the technology was the creation of deepfake pornography. Since then, the technology has advanced substantially and gained wider adoption. Depending on a threat actor’s goal, deepfakes can be leveraged in different ways.

For instance, threat actors could gather sensitive personal information about a target, collect voice samples from the target’s social media, and then build a deepfake of the target’s voice to bypass voice authentication and take over an account. They could also use the cloned voice in phishing or extortion scams.

However, the most destructive uses of deepfake technology will most likely come from Advanced Persistent Threats. Cyber influence campaigns can use deepfakes to create convincing videos and images that sway public opinion, as seen in Russia’s release of deepfake footage of Ukrainian President Volodymyr Zelenskyy in March 2022.

The following are notable events and forum discussions in which threat actors used, or discussed using, deepfake technology to commit or plan cybercrime:

- September 2019: A threat actor used an audio deepfake to contact the CEO of a UK-based organization, tricking the CEO into believing they were speaking with an executive at the organization’s parent company. The threat actor convinced the CEO to initiate a financial transfer of US$243,000. This was the first recorded instance of an audio deepfake being used to conduct financial crime.

- December 2021: Threat actors combined deepfake audio and fraudulent emails in a social engineering attack that scammed a United Arab Emirates company out of US$35 million. In this elaborate scheme, the threat actors posed as a director of the company and persuaded employees to transfer funds.

- November 2022: A Twitter user posted a deepfake video of FTX founder Sam Bankman-Fried promoting a link for users affected by FTX’s collapse, which claimed to offer free cryptocurrency as compensation. The offer was a scam.

- January 2023: Multiple threads on the now-defunct Breach Forums shared tips and tools for creating and learning about deepfakes. Because the forum was linked to fraud and hacking, the sharing of deepfake tutorials there suggests that more threat actors may be gaining the skills to deploy deepfakes in cybercrime.

- March 2023: A thread on the high-tier Russian-language forum XSS discussed how to use deepfake technology to bypass the facial biometric verification that OnlyFans requires before users can upload content. Presumably, the threat actors would use this technique to upload AI-generated or deepfake pornography to the site in place of original, consensual content.

Stay ahead of emerging digital threats with Flashpoint

The rise of artificial intelligence is creating an environment in which AI tools, including deepfake technologies, are easily accessible to threat actors seeking to commit fraud, spread disinformation, and publish malicious content targeting victims. Sign up for a free trial to stay ahead of emerging digital threats.