The Common Vulnerability Scoring System (CVSS) is currently developed and managed by FIRST.Org, Inc. (FIRST), a US-based non-profit organization, whose mission is to help computer security incident response teams across the world.

CVSSv1 was first released in February 2005 by the National Infrastructure Advisory Council (NIAC). This initial draft was not subject to peer review or reviewed by other qualified organizations. In April 2005, NIAC selected the Forum of Incident Response and Security Teams (FIRST) to become the custodian of CVSS for future development.

Feedback from vendors utilizing CVSSv1 in production suggested there were “significant issues with the initial draft of CVSS”. Work on CVSSv2 began in April 2005, with the final specification being launched in June 2007.

What is CVSSv2?

CVSSv2 became widely used and supported by many vendors as well as vulnerability scanning tools, security detection tools, and service providers. Even with it being heavily relied on, there were problems with the standard. In 2013, Risk Based Security published an open letter to discuss concerns as work on CVSSv3 was underway. Some of the reviewed areas were:

- Insufficient granularity

- Vague and ambiguous guidelines

- The challenge of scoring authentication

- The pitfalls of “Access Complexity”

- Limitations of the “Access Vector” breakdown

- And a variety of other considerations to improve vulnerability scoring

The conclusion pointed to the need for CVSS to be overhauled. It had too many shortcomings to provide an adequate and reliable risk scoring model.

Initial work on CVSSv3 commenced in May 2012, as the FIRST Board approved the roster for the CVSS Special Interest Group (SIG) team that would oversee the development. During the preview stage RBS provided a longer report with feedback directly to the FIRST CVSS SIG.

When was CVSSv3 officially released?

In June 2015, CVSSv3 was officially released, eight years after the standard was last officially updated.

As part of our VulnDB offering, we have scored tens of thousands of vulnerabilities with CVSSv2 and were looking forward to an improved standard. While improvements have been made, CVSSv3, unfortunately, also introduced new concerns and did not completely address some of the problems with CVSSv2.

We first wanted to better understand the improvements and limitations of CVSSv3 and also observe its adoption. CVSSv3 adoption has not been swift.

As part of our analysis into CVSSv3, we have decided to publish a series of blog posts over the coming month, sharing our thoughts on CVSSv3 and, hopefully, ultimately concluding if CVSSv3 provides sufficient value to justify a switch.

CVSSv3: Problems with file-based attacks

Our ultimate goal of analyzing these two scoring systems was to conclude if CVSSv3 adds sufficient value over CVSSv2—justifying a switch in our VulnDB vulnerability intelligence solution.

Often when a new system is created, it introduces new challenges. This blog post focuses on what we believe to be one of the bigger concerns introduced by CVSSv3.

Attack Vector: The “Local vs. OSI Layer 3” convolutedness

One of the changes to the CVSSv3 specification is that vulnerabilities relying on file-based attack vectors e.g. against your favorite PDF reader should no longer be scored as network-based attacks (AV:N), but local attacks (AV:L) even if the attacker is not a local user. These types of attack vectors are usually referred to as “context-dependent” or “user-assisted”, meaning that the vulnerability would not be triggered without some form of user interaction.

An example of this change can be found in example 16, “Adobe Acrobat Buffer Overflow Vulnerability (CVE-2009-0658)”, of the CVSSv3 Examples document.

The reasoning provided by the CVSS-SIG is that the attacker’s path is not through OSI layer 3 (the network layer). Instead, it requires tricking a user into opening a file that ultimately resides on the victim’s system. The fact that the attack vector may be a link to a file hosted on a website that is potentially automatically opened is disregarded. That is, unless the functionality to view said file is implemented within the browser e.g. how Google Chrome and Microsoft Edge support opening of PDF files. In such cases, it should still be treated as a remote issue per example 23.

Summarizing the significance of changes

Let’s try to sum up: If a PDF file (or any other file type) is passed from the browser to a separate program e.g. opening in Adobe Reader, it becomes a local issue. If the same file type opens within a browser component, it’s still a remote issue – even if the attack vector and amount of user interaction required is exactly the same.

Using CVSSv2, this type of vulnerability scores 9.3, which translates into a ‘Critical’ issue (though this term was not used for CVSSv2; NVD just defined “Low”, “Medium”, and “High”). Using CVSSv3, the same issue now scores 7.8, i.e. a ‘High’ severity issue. The score drops 1.5 points because the required user interaction now, inappropriately, affects both the ‘Attack Vector (AV)’ metric and the new ‘User Interaction (UI)’ metric. We find it suboptimal that user interaction in these cases is reflected not only by the metric newly created for it, ‘User Interaction (UI)’, but also by the attack vector, as both contribute to reducing the risk rating.
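The severity labels mentioned above can be written down as a small lookup. This is a minimal sketch: the function names are ours, the CVSSv3 ranges follow the qualitative severity rating scale in the CVSSv3 specification, and the CVSSv2 ranges follow the three-level ranking NVD applied to CVSSv2 scores.

```python
def v3_severity(score: float) -> str:
    """Qualitative severity rating scale from the CVSSv3 specification."""
    if score == 0.0:
        return "None"
    if score <= 3.9:
        return "Low"
    if score <= 6.9:
        return "Medium"
    if score <= 8.9:
        return "High"
    return "Critical"


def v2_nvd_severity(score: float) -> str:
    """NVD's three-level ranking for CVSSv2 scores (there is no 'Critical')."""
    if score <= 3.9:
        return "Low"
    if score <= 6.9:
        return "Medium"
    return "High"


# The file-based attack discussed above: 9.3 under CVSSv2, 7.8 under CVSSv3.
print("CVSSv2 9.3 on NVD's v2 scale:", v2_nvd_severity(9.3))
print("CVSSv2 9.3 on the v3 scale:  ", v3_severity(9.3))
print("CVSSv3 7.8 on the v3 scale:  ", v3_severity(7.8))
```

Calling 9.3 a ‘Critical’ issue therefore means reading a CVSSv2 score against the CVSSv3 scale; on NVD's own CVSSv2 scale it is simply “High”.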

On the surface, a 1.5 point drop in the score and a severity rating lowered from ‘Critical’ to ‘High’ is perhaps not a major concern for most. That said, file-based attacks leading to code execution are generally considered quite severe and should be prioritized accordingly. Historically, they have been popular in attacks for a reason. Even if a file may end up being downloaded to the system, the attacker is rarely a local attacker, but makes use of a “remote” attack vector. The fact that many organizations will only prioritize “Critical” vulnerabilities to be patched out of cycle or outside a maintenance window makes this an important issue to understand given the potential severity.

Also, consider that a standard local privilege escalation vulnerability is now more severe (scoring 8.4) than code execution via e.g. a malicious PDF, Office, or media file. Scoring aside for a moment, when reviewing the potential risks, which vulnerability would you generally prioritize first? While local privilege escalation vulnerabilities should not be underestimated, the risk of compromise via malicious PDF files opened by unsuspecting users has proven to be a much greater threat in most environments.

To boil this down, we believe this is our first example of CVSSv3 scoring leading organizations to prioritize the wrong vulnerabilities for fixing.

Misleading vector strings

A final concern about this change is how it leads to possible confusion conveyed by the CVSSv3 vector string. In CVSSv2, the vector string may be used as a quick way to get an overall impression of a vulnerability’s impact and attack vector without reading the associated vulnerability details (if available; we’re looking at you Oracle).

Currently the vector string for such vulnerabilities is:

CVSS:3.0/AV:L/AC:L/PR:N/UI:R/S:U/C:H/I:H/A:H = 7.8 (High)

Based on this, it becomes harder for people to determine if an issue is a local privilege escalation vulnerability requiring some user interaction or really a user-assisted vulnerability relying on a file-based attack vector.
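To illustrate how little the vector string reveals here, the following sketch splits a CVSSv3 vector into its metrics. The helper name and the label table are our own, purely for illustration.

```python
def parse_vector(vector: str) -> dict:
    """Split a CVSSv3 vector string into a {metric: value} dictionary."""
    parts = vector.split("/")
    if not parts[0].startswith("CVSS:3"):
        raise ValueError("expected a CVSSv3 vector string")
    return dict(part.split(":", 1) for part in parts[1:])


# Human-readable names for the Attack Vector values.
AV_LABELS = {"N": "Network", "A": "Adjacent", "L": "Local", "P": "Physical"}

file_based = parse_vector("CVSS:3.0/AV:L/AC:L/PR:N/UI:R/S:U/C:H/I:H/A:H")
print("Attack Vector:", AV_LABELS[file_based["AV"]])
print("User Interaction:", file_based["UI"])
```

Every field a reader could use to tell a malicious PDF from a user-assisted local privilege escalation carries the same value in both cases, so the parsed vector alone cannot separate them.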

We believe that a much simpler approach would be to score such vulnerabilities as:

CVSS:3.0/AV:N/AC:L/PR:N/UI:R/S:U/C:H/I:H/A:H = 8.8 (High)

While it remains a “High” severity issue, the change we are proposing makes scoring more straightforward. Analysts just have to focus on the actual attack vector, i.e. whether the attacker is a local user, and not on OSI layer 3 theory. They also do not have to score the same issue differently depending on whether the file type opens directly in a browser component or in another program. User interaction is no longer misleadingly reflected by both the “User Interaction (UI)” metric and the “Attack Vector (AV)” metric. Finally, the vector string remains as informative as in CVSSv2 while avoiding confusion.
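The scores quoted in this section can be reproduced with a short sketch of the CVSSv3.0 base equations. The metric weights and formulas are taken from the CVSSv3.0 specification; the function names are ours, and the rounding helper uses the integer-based variant later published with CVSSv3.1, an assumption made here only to avoid floating-point surprises.

```python
import math

# Metric weights from the CVSSv3.0 specification.
AV = {"N": 0.85, "A": 0.62, "L": 0.55, "P": 0.2}
AC = {"L": 0.77, "H": 0.44}
UI = {"N": 0.85, "R": 0.62}
CIA = {"H": 0.56, "L": 0.22, "N": 0.0}
PR_UNCHANGED = {"N": 0.85, "L": 0.62, "H": 0.27}
PR_CHANGED = {"N": 0.85, "L": 0.68, "H": 0.5}


def roundup(value: float) -> float:
    """Round up to one decimal (integer-based variant from CVSSv3.1)."""
    scaled = round(value * 100000)
    if scaled % 10000 == 0:
        return scaled / 100000.0
    return (math.floor(scaled / 10000) + 1) / 10.0


def base_score(vector: str) -> float:
    """Compute the CVSSv3.0 base score for a vector string."""
    m = dict(part.split(":") for part in vector.split("/")[1:])
    changed = m["S"] == "C"
    iss = 1 - (1 - CIA[m["C"]]) * (1 - CIA[m["I"]]) * (1 - CIA[m["A"]])
    if changed:
        impact = 7.52 * (iss - 0.029) - 3.25 * (iss - 0.02) ** 15
    else:
        impact = 6.42 * iss
    pr = (PR_CHANGED if changed else PR_UNCHANGED)[m["PR"]]
    exploitability = 8.22 * AV[m["AV"]] * AC[m["AC"]] * pr * UI[m["UI"]]
    if impact <= 0:
        return 0.0
    total = impact + exploitability
    return roundup(min(1.08 * total if changed else total, 10))


current = base_score("CVSS:3.0/AV:L/AC:L/PR:N/UI:R/S:U/C:H/I:H/A:H")
proposed = base_score("CVSS:3.0/AV:N/AC:L/PR:N/UI:R/S:U/C:H/I:H/A:H")
local_privesc = base_score("CVSS:3.0/AV:L/AC:L/PR:N/UI:N/S:U/C:H/I:H/A:H")
print(current, proposed, local_privesc)
```

Under these assumptions the file-based attack scores 7.8 as currently guided and 8.8 with AV:N, while the local privilege escalation without user interaction scores 8.4 and so ranks above the currently guided file-based score.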

Fortunately, changing this is simply a guideline change and would not require changing the scoring system or the standard itself. While this seems like great news, it is critical to understand that CVSSv3 scoring relies heavily on the guidelines. As long as this guideline stands, any organization that follows it when scoring is, on this point, not helping your organization properly prioritize vulnerabilities.

CVSSv3: Problems with exploit reliability

Attack Complexity – Exploit Reliability / Ease of Exploitation

When studying the part of the CVSSv3 specification that describes how to assess the ‘Attack Complexity (AC)’ metric, one thing specifically stood out to us, as a bullet point states the following criteria for when to treat a vulnerability as High (AC:H):

“The attacker must prepare the target environment to improve exploit reliability. For example, repeated exploitation to win a race condition, or overcoming advanced exploit mitigation techniques.”

We previously warned the CVSS SIG about this particular criterion when providing private feedback on CVSSv3 Preview 2. While they removed some of the very problematic phrasing, they apparently decided to leave this criterion in place.

This appears to be a poor attempt at creating a score such as the Microsoft “Exploitability Index” that takes into account “Exploit Reliability”. We do not dispute that knowing this information would provide value, but considering exploit reliability and which advanced exploit mitigation techniques need to be defeated seems to fall outside the scope of CVSS. It is especially concerning because we believe this criterion should not be considered in the base score.

There are a couple of reasons for us not supporting this:

- This is more appropriate to consider in the temporal metrics. In fact, it is already there to a quite fitting degree. The temporal ‘Exploit Code Maturity (E)’ metric covers not only the availability of PoCs and exploits, but how reliable these are as well. The ‘Functional (F)’ value describes an exploit that “works in most situations”, while the ‘High (H)’ value describes an exploit that “works in every situation”. We find this to adequately cover exploitation for the purpose of CVSS without adding too much complexity or impacting the base score.

- Thinking about how this impacts scoring helps to clarify the concern. If someone did manage to create a reliable, fully working exploit for a given vulnerability, why should the “Attack Complexity (AC)” base metric reduce the overall score? No one cares whether the vulnerability was difficult or easy to exploit once an exploit is out and works reliably. With the current criterion, if scoring follows the guidelines as written, the base score is lowered and the severity downplayed, which may ultimately result in improper prioritization of the issue. Only the reliability of the exploit should have relevance to the risk rating and score, and that is already factored into the temporal ‘Exploit Code Maturity (E)’ metric, as described above.

Consider for a moment a real world example; your standard remote code execution vulnerability that has a score of 9.8 i.e. a “Critical” issue as deemed by CVSS. Based on the current guidelines if exploitation requires “overcoming advanced exploit mitigation techniques”, the same vulnerability is suddenly lowered to a score of 8.1 i.e. a “High” severity even if a fully functional and reliable exploit is available for both.

- We believe that it becomes too complex to factor in whether, and which, advanced exploit mitigation techniques (e.g. ASLR, DEP, SafeSEH) must be overcome to qualify. It would be too time-consuming to assess for starters, and many people responsible for scoring CVSS may not have sufficient technical insight or qualifications to properly determine it, unless it were simply assumed that e.g. all “memory corruption” type vulnerabilities should automatically be assigned High (AC:H).

In fact, amusingly, it seems that the CVSS SIG itself is struggling with this criterion. In the provided documentation, they score their own buffer overflow example for Adobe Reader, CVE-2009-0658, as Low (AC:L) in example 16. That type of vulnerability would today very likely require bypassing one or more “advanced exploit mitigation techniques”, so this just adds to the confusion and muddles when and how this criterion should be considered.
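To make the 9.8 versus 8.1 example above concrete, the following sketch applies the CVSSv3.0 base equations to a remote code execution vector, changing only Attack Complexity, and then applies the temporal ‘Exploit Code Maturity (E)’ weight for comparison. The weights come from the CVSSv3.0 specification; the function names are ours, and the rounding helper uses the integer-based variant later published with CVSSv3.1, which is an assumption made to sidestep floating-point artifacts.

```python
import math

AC_WEIGHT = {"L": 0.77, "H": 0.44}  # Attack Complexity (base metric)
E_WEIGHT = {"H": 1.0, "F": 0.97}    # Exploit Code Maturity (temporal metric)


def roundup(value: float) -> float:
    """Round up to one decimal (integer-based variant from CVSSv3.1)."""
    scaled = round(value * 100000)
    if scaled % 10000 == 0:
        return scaled / 100000.0
    return (math.floor(scaled / 10000) + 1) / 10.0


def rce_base_score(ac: str) -> float:
    """Base score of AV:N/AC:<ac>/PR:N/UI:N/S:U/C:H/I:H/A:H."""
    # Weights 0.85 are AV:N, PR:N and UI:N respectively.
    exploitability = 8.22 * 0.85 * AC_WEIGHT[ac] * 0.85 * 0.85
    # C:H/I:H/A:H with scope unchanged.
    impact = 6.42 * (1 - (1 - 0.56) ** 3)
    return roundup(min(impact + exploitability, 10))


def temporal_score(base: float, e: str) -> float:
    """Temporal score with Remediation Level and Report Confidence undefined."""
    return roundup(base * E_WEIGHT[e])


print("AC:L base:", rce_base_score("L"))
print("AC:H base:", rce_base_score("H"))
print("AC:L base with E:F:", temporal_score(rce_base_score("L"), "F"))
```

Under these assumptions, AC:H takes the base score from 9.8 to 8.1, whereas rating the exploit as ‘Functional (F)’ rather than ‘High (H)’ in the temporal metrics only moves 9.8 to 9.6 and leaves the base score untouched.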

Even if we completely removed the requirement to assess the bypass of advanced exploit mitigation techniques and looked at the reliability on a more general scale, there are still a lot of unanswered questions for how to assess this such as:

- What if an exploit is reliable 95% of the time? 80%? 50%? How do we reasonably and consistently assess this metric? Where should the line be drawn for when a vulnerability qualifies for High (AC:H)? If everything that isn’t 100% should be treated as AC:H, it is clear that quite a few issues would end up being scored lower, and the impact to an organization downplayed improperly.

- What if an exploit works reliably e.g. against Linux Kernel version 4.9.4, but only half the time against version 4.4.43? Does that justify Low (AC:L) or High (AC:H) i.e. should we factor in best or worst case?

Ultimately, while it would add value to consider such criteria, in our view it has no place in the CVSSv3 base score. Even if moved into the temporal metrics, it would still end up being too complex and problematic for both the reasons previously described and also the additional concerns discussed in our paper on exploitability / priority index rating systems.

What should be done about CVSSv3?

We recommend that this whole bullet be removed immediately from the CVSSv3 specification. It is an unreasonable criterion to include. If left in, it most likely will either not be honored properly during scoring or provide a back door for anyone who wants to reduce the base score, which defeats the point of having it in the first place. In our opinion, the ‘Attack Complexity (AC)’ metric should focus solely on how easy or complex it would be to launch an attack, e.g. factoring in typical configurations and other conditions that may have relevance. Exploit reliability should not be part of the base score and, if still desired even with the issues outlined, should be moved to the temporal metrics.

CVSSv3: Problems with Scope

“Formally, Scope refers to the collection of privileges defined by a computing authority (e.g. an application, an operating system, or a sandbox environment) when granting access to computing resources (e.g. files, CPU, memory, etc.).”

According to the CVSSv3 Specification document, the ‘Scope (S)’ metric introduces the ability to measure the impact of a vulnerability on components other than the vulnerable component itself. This is considered a key improvement of CVSSv3. The lack of this capability in CVSSv2 was problematic for vendors, and the reasoning for this change is documented in the CVSSv3 User Guide.

When reviewing the ‘Scope (S)’ metric with people who are new to CVSSv3, it is undoubtedly the change that leads to the most confusion. The reason is that it requires the consideration of authorization and, in particular, whether the vulnerability’s impact crosses authorization boundaries or, said another way, reaches into different permissions. A simple way to think about this would be code running with specific permissions within a Virtual Machine (VM) guest, where the vulnerability allows an attacker within the guest to break out of the VM and take actions on the host. While the CVSS SIG has attempted to simplify this metric from early proposals, it is still not straightforward to wrap one’s head around the issue and scoring.

Now, let us be clear, we view the ‘Scope (S)’ metric as a special beast. It does add value and provides advantages when scoring some types of vulnerabilities – specifically virtualization as one of its primary focus points. In other cases it is cause for concern. We are planning a future blog post in this series that will discuss our views on advantages of CVSSv3 over CVSSv2; we’ll revisit the ‘Scope (S)’ metric there and cover the positive aspects it provides. In this blog, we’ll just focus on the concerns.

How well do you understand your software and devices?

The ‘Scope (S)’ metric is not reserved for scoring virtualization issues, where a vulnerability exploited within a guest environment may compromise the host. It is used when dealing with the crossing of any authorization scope, including sandboxes. Using it in this fashion makes sense, as the impact of a vulnerability outside a sandbox is far worse than within a sandbox. The problem is that this criterion requires analysts to have significant technical insight into how a vulnerability and the vulnerable product or component actually work. This also includes understanding how it may be isolated from other components or even the system itself. And keep in mind that this is not just for a few products, but for every single scored device, piece of software, and operating system. While vendors that are scoring their own product vulnerabilities should have this knowledge, it is, naturally, unrealistic to expect it from others responsible for scoring vulnerabilities.

The concern and limitation is very nicely illustrated by an example provided by the CVSS SIG in the CVSSv3 Specification document when discussing cases where scope changes do not occur:

“A scope change would not occur, for example, with a vulnerability in Microsoft Word that allows an attacker to compromise all system files of the host OS, because the same authority enforces privileges of the user’s instance of Word, and the host’s system files.”

This is actually a pretty bad example, as, in fact, a scope change would very likely occur. Why? Since the release of Microsoft Office 2010 a sandboxing feature called “Protected View” has been included in which untrusted files are opened by default. This limits the access to e.g. files and registry keys to prevent an exploit that “allows an attacker to compromise all system files of the host OS”, unless it somehow manages to cross authorization scopes. Even if a victim disabled “Protected View” and was running Office with administrator privileges, UAC and the integrity mechanism, other authorization scopes in modern Windows versions, would likely limit a compromise of “all system files of the host OS”.

If the CVSS SIG is not aware of this sandboxing feature in a very prevalent piece of software, how can it be expected of analysts or any organization relying on CVSSv3 to score vulnerabilities affecting their infrastructure to be familiar with all these different permissions and security layers?

While that is not a fault of the CVSSv3 standard, it will be a cause of inconsistent scoring of ‘Scope (S)’ between various security tools, VDBs (vulnerability databases), and other people responsible for scoring. It is important that CVSSv3 consumers realize this limitation: the ‘Scope (S)’ metric will most likely only be applied in the most obvious cases, and scoring will rely heavily on trusting a vendor’s assessment of the vulnerability.

Impact and scope

Just as we discussed with file-based attack vectors, the new ‘Scope (S)’ metric may also in some cases lead to misleading vector strings. The reason is how the impact metrics should be scored in the case of a scope change.

“If a scope change has not occurred, the Impact metrics should reflect the confidentiality, integrity, and availability (CIA) impact to the vulnerable component. However, if a scope change has occurred, then the Impact metrics should reflect the CIA impact to either the vulnerable component, or the impacted component, whichever suffers the most severe outcome.”

When looking at the vector string for a vulnerability with a scope change, the most reasonable expectation would be for the impact metrics to then reflect the impact outside of the scope. If considering virtualization as an example, that way it would be clear what the impact to the host is. If the impact metrics may convey either the impact inside the original scope or outside, it is impossible to quickly understand the ultimate impact to the host.

Furthermore, when a scope change occurs, the ‘Scope (S)’ metric already raises the score. It seems illogical to additionally score based on the worst-case impact to either the vulnerable or the impacted component, as this would likely inflate the scores. Score inflation is something we’ve started noticing as a problem with CVSSv3 in general, and we plan to discuss it in a future blog post about general scoring concerns.

While we are not trying to exaggerate this as a major issue, it is just another indication that vector strings are, unfortunately, no longer as reliable when used as quick pointers, as they were in CVSSv2.

The 10.0 score truncation

What is the maximum score for a vulnerability in CVSSv2? If you said 10, you’d be correct. And the only way for a vulnerability to be scored 10 in CVSSv2 is the following vector string that describes a remotely exploitable vulnerability that has no special requirements, does not require authentication, and leads to a full compromise:

AV:N/AC:L/Au:N/C:C/I:C/A:C
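The claim that only one CVSSv2 vector reaches 10.0 can be checked by brute force. The sketch below implements the CVSSv2 base equation with the metric weights from the CVSSv2 specification and enumerates all 729 base vectors; the function and variable names are our own.

```python
from itertools import product

# Metric weights from the CVSSv2 specification.
AV = {"L": 0.395, "A": 0.646, "N": 1.0}
AC = {"H": 0.35, "M": 0.61, "L": 0.71}
AU = {"M": 0.45, "S": 0.56, "N": 0.704}
CIA = {"N": 0.0, "P": 0.275, "C": 0.660}


def v2_base_score(av, ac, au, c, i, a):
    """CVSSv2 base equation, rounded to one decimal."""
    impact = 10.41 * (1 - (1 - CIA[c]) * (1 - CIA[i]) * (1 - CIA[a]))
    exploitability = 20 * AV[av] * AC[ac] * AU[au]
    f = 0 if impact == 0 else 1.176
    return round((0.6 * impact + 0.4 * exploitability - 1.5) * f, 1)


# Enumerate all 3^6 = 729 base vectors and keep those that score 10.0.
top = [
    f"AV:{av}/AC:{ac}/Au:{au}/C:{c}/I:{i}/A:{a}"
    for av, ac, au, c, i, a in product(AV, AC, AU, CIA, CIA, CIA)
    if v2_base_score(av, ac, au, c, i, a) == 10.0
]
print(top)
```

With these weights the list contains exactly one entry, the vector shown above.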

Clearly, this is the same for CVSSv3, right? Not exactly. While the maximum score is still 10, some issues with a changed ‘Scope’ metric are capped at 10 even if the vulnerability technically should be assigned a higher score! Take a look at the following four scoring examples, where the first is the equivalent of what was scored 10 in CVSSv2:

CVSS:3.0/AV:N/AC:L/PR:N/UI:N/S:U/C:H/I:H/A:H = 9.8 (Critical)

CVSS:3.0/AV:N/AC:L/PR:N/UI:N/S:C/C:L/I:L/A:H = 9.9 (Critical)

CVSS:3.0/AV:N/AC:L/PR:N/UI:N/S:C/C:L/I:H/A:H = 10.0 (Critical)

CVSS:3.0/AV:N/AC:L/PR:N/UI:N/S:C/C:H/I:H/A:H = 10.0 (Critical)

While the impact of the 2nd example score is ‘Low’ for two of the impact metrics, it scores a 9.9. This means that the last two with higher impacts only score 0.1 higher, as they end up being capped at 10. The last two even end up scoring the same regardless of one having one impact metric of ‘Low’ and the other having all three set to ‘High’.
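The cap becomes visible when the value is printed before the min(…, 10) step of the base equation. The sketch below does this for the four examples, which all share AV:N/AC:L/PR:N/UI:N. It uses the CVSSv3.0 equations and weights; the function names are ours, and the rounding helper follows the integer-based variant from CVSSv3.1 as an assumption to avoid floating-point artifacts.

```python
import math

CIA = {"H": 0.56, "L": 0.22, "N": 0.0}
# Exploitability of AV:N/AC:L/PR:N/UI:N, shared by all four examples.
EXPLOITABILITY = 8.22 * 0.85 * 0.77 * 0.85 * 0.85


def roundup(value: float) -> float:
    """Round up to one decimal (integer-based variant from CVSSv3.1)."""
    scaled = round(value * 100000)
    if scaled % 10000 == 0:
        return scaled / 100000.0
    return (math.floor(scaled / 10000) + 1) / 10.0


def uncapped_and_final(scope, c, i, a):
    """Return (value before the cap at 10, final base score).

    Only meant for vectors with a non-zero impact, as in the examples.
    """
    iss = 1 - (1 - CIA[c]) * (1 - CIA[i]) * (1 - CIA[a])
    if scope == "C":
        impact = 7.52 * (iss - 0.029) - 3.25 * (iss - 0.02) ** 15
        raw = 1.08 * (impact + EXPLOITABILITY)
    else:
        raw = 6.42 * iss + EXPLOITABILITY
    return raw, roundup(min(raw, 10))


examples = [("U", "H", "H", "H"), ("C", "L", "L", "H"),
            ("C", "L", "H", "H"), ("C", "H", "H", "H")]
results = [uncapped_and_final(*example) for example in examples]
for (scope, c, i, a), (raw, final) in zip(examples, results):
    print(f"S:{scope}/C:{c}/I:{i}/A:{a}  uncapped={raw:.2f}  final={final}")
```

Under these assumptions the last two vectors come out at roughly 10.6 and 10.7 before the cap, so the difference between them is lost once both are truncated to 10.0.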

Having re-read the CVSSv3 Specification, User Guide, and Examples documents, we have not seen this mentioned anywhere. The User Guide document even covers significant changes to CVSSv3 over CVSSv2, but omits this part. In our view, this is a pretty significant change, and it seems to have flown completely under the radar. To be completely transparent, we only more or less accidentally noticed this issue when playing around with the CVSSv3 calculator.

In our view, it is highly problematic that the CVSSv3 risk scoring system does not properly accommodate the changes, and we believe it doesn’t properly convey risk and differentiate vulnerabilities when the ‘Scope’ metric comes into play.

Scope and its impact on XSS vulnerabilities

Believe it or not, Cross-site scripting (XSS) vulnerabilities are also impacted by the new ‘Scope (S)’ metric. According to “section 3.4. Cross Site Scripting Vulnerabilities” in the CVSSv3 User Guide, one of the problems with CVSSv2 was that:

“Specific guidance was necessary to produce non-zero scores for cross-site scripting (XSS) vulnerabilities, because vulnerabilities were scored relative to the host operating system that contained the vulnerability.”

These provisions meant that a typical reflected XSS would be scored as per example #1, phpMyAdmin Reflected Cross-site Scripting Vulnerability (CVE-2013-1937), in the CVSSv3 Examples document:

AV:N/AC:M/Au:N/C:N/I:P/A:N = 4.3

That equals a low-end ‘Medium’ severity issue, which we believe is pretty accurate for how reflected cross-site scripting vulnerabilities are perceived.

In CVSSv3, since the ‘Scope (S)’ metric is now covering XSS issues, it means they no longer require specific guidance. On the downside, such vulnerabilities now significantly increase in score with a standard reflected XSS scoring:

CVSS:3.0/AV:N/AC:L/PR:N/UI:R/S:C/C:L/I:L/A:N = 6.1

Why do we have a 1.8 point jump? Another guideline change requires ‘Confidentiality (C)’ to now be rated as ‘Low’ (C:L), which adds 1.1 points to the score. Then the ‘Scope (S)’ change further adds 0.7 on top of that. Suddenly we end up with an above-average score of 6.1 for a simple reflected XSS. While it remains a ‘Medium’ severity issue, for anyone relying on the actual scores for risk prioritization, the score is now much higher than what is publicly perceived to be the risk. This may result in such vulnerabilities being prioritized over other issues that should receive attention first.
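The 1.1 and 0.7 contributions can be reproduced step by step. The sketch below starts from a CVSSv3 vector that mirrors the CVSSv2 scoring (C:N and S:U), a baseline constructed here purely to isolate the two changes, and then applies each guideline change in turn. It uses the CVSSv3.0 equations and weights; names are ours, and the rounding helper is the integer-based variant from CVSSv3.1.

```python
import math

CIA = {"H": 0.56, "L": 0.22, "N": 0.0}


def roundup(value: float) -> float:
    """Round up to one decimal (integer-based variant from CVSSv3.1)."""
    scaled = round(value * 100000)
    if scaled % 10000 == 0:
        return scaled / 100000.0
    return (math.floor(scaled / 10000) + 1) / 10.0


def xss_score(scope: str, c: str, i: str) -> float:
    """Base score of AV:N/AC:L/PR:N/UI:R/S:<scope>/C:<c>/I:<i>/A:N.

    Only meant for vectors with a non-zero impact.
    """
    iss = 1 - (1 - CIA[c]) * (1 - CIA[i])
    exploitability = 8.22 * 0.85 * 0.77 * 0.85 * 0.62
    if scope == "C":
        impact = 7.52 * (iss - 0.029) - 3.25 * (iss - 0.02) ** 15
        return roundup(min(1.08 * (impact + exploitability), 10))
    return roundup(min(6.42 * iss + exploitability, 10))


steps = [
    ("baseline S:U/C:N/I:L", xss_score("U", "N", "L")),
    ("add C:L", xss_score("U", "L", "L")),
    ("add S:C", xss_score("C", "L", "L")),
]
scores = [score for _, score in steps]
for label, score in steps:
    print(f"{label}: {score}")
```

The baseline happens to score 4.3, the same as the CVSSv2 score; adding C:L gives 5.4 and the scope change gives 6.1.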

Having the ‘Scope (S)’ metric apply to XSS vulnerabilities leads us to another obvious question: Why should a vulnerability be considered more severe because it impacts not the host itself, but the client system?

We should all be able to agree that the risk is higher for a vulnerability in a virtualized environment that impacts the host and not just the guest. The same could be said for a vulnerability outside a sandbox instead of inside it. However, a reflected XSS poses no more risk, because the target happens to be the client system and not the server hosting the vulnerable web application.

It seems that to better reflect (see what we did there?) reality, it would be more appropriate to not apply the new ‘Scope (S)’ metric so broadly, but focus on vulnerabilities, where it actually does lead to an increased risk.

CVSSv3: Old problems remain

The Access Complexity Segregation

In early 2013, Risk Based Security published an open letter to the FIRST CVSS SIG about the problems with CVSSv2. One of the sections, “The Access Complexity Segregation”, deals with how, even with three levels of “Access Complexity (AC)”, the CVSSv2 specification is written in a manner that mostly sees two of the levels being used.

“The current abstraction for Access Complexity (AC) all but ensures that only 2 of the 3 choices get used with any frequency. The bar between Medium and High is simply set too high in the current implementation. To support more accurate and granular scoring, it would be advantageous to have a 4-tier system for AC.”

Overall, it does not offer sufficient granularity when scoring vulnerabilities. One of the problems documented in that section is “The Context-Dependent Conundrum” related to user-assisted attacks. When we first learned that the FIRST CVSS SIG was adding the “User Interaction (UI)” metric to CVSSv3, we were quite excited. We believed that it should at least address part of the problem by offering more granularity in such cases. Unfortunately, our excitement was short-lived when learning that instead of keeping the three levels of scoring, CVSSv3 now only provides two: “Low (L)” and “High (H)”.

The CVSS SIG did not improve the granularity provided by the “Access Complexity (AC)” metric, now renamed to “Attack Complexity (AC)” in CVSSv3. It is unclear why the CVSS SIG decided to strip “Medium (M)”. If it was removed to simplify the use of CVSSv3, it is quite ironic, as CVSSv3 overall adds so much more complexity and confusion compared to CVSSv2. Regardless of the reasoning behind it, we believe that this was a clear mistake.

Fortunately, it is a mistake that is pretty straight-forward to rectify by reintroducing “Medium (M)” as a scoring option even if not addressing the related concerns voiced about CVSSv2. To do that, the CVSS SIG would still need to revisit the documented requirements for each level to ensure better use of all three levels.

The cyberspace five-0 problem

Another concern we previously voiced about CVSSv2 is its inability to properly score remote vulnerabilities with a very minor impact. Basically, the problem is that standard remote vulnerabilities not requiring authentication won’t end up scoring below 5.0.

To help illustrate the issue, consider a minor, unauthenticated remote issue that allows disclosing the version of a specific product being used. That was scored 5.0 in CVSSv2 and now it has been increased to a 5.3 in CVSSv3. On the surface an increase of 0.3 seems very trivial and not even worth bringing up. However, the real point is that many people do not even consider such issues as real vulnerabilities; others consider them very minor issues, as they do provide somewhat helpful information during the reconnaissance phase of an attack.

What about a simple path disclosure issue or enumeration issue? These also both increased and are now scored 5.3. And remember, if anything we’d want them to be scored a lot lower, not higher. In fact, any standard remote, unauthenticated vulnerability with a limited impact to either the confidentiality, integrity, or availability ends up scoring 5.3 even if the impact is minuscule. CVSSv2 as well as CVSSv3 simply cannot deal with remote attacks that have minor impacts.

This means that no remote, unauthenticated issues – regardless of how minuscule the impact may be – are going to score below a “Medium” severity. In fact, when looking at CVSSv3 scoring we have found that very few vulnerabilities overall score as “Low”, which is something we’re going to discuss more in our next blog post.
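The floor can be seen by scoring the smallest possible impact in both versions. The sketch below hard-codes the exploitability of a standard remote, unauthenticated, low-complexity vector (AV:N/AC:L/Au:N in CVSSv2, AV:N/AC:L/PR:N/UI:N/S:U in CVSSv3) and feeds in the lowest non-zero impact weight each specification offers. Function names are ours; the CVSSv3 rounding helper is the integer-based variant from CVSSv3.1.

```python
import math


def roundup(value: float) -> float:
    """Round up to one decimal (integer-based variant from CVSSv3.1)."""
    scaled = round(value * 100000)
    if scaled % 10000 == 0:
        return scaled / 100000.0
    return (math.floor(scaled / 10000) + 1) / 10.0


def v2_floor(impact_weight: float) -> float:
    """CVSSv2 score of AV:N/AC:L/Au:N with a single impact metric set."""
    impact = 10.41 * impact_weight
    exploitability = 20 * 1.0 * 0.71 * 0.704
    return round((0.6 * impact + 0.4 * exploitability - 1.5) * 1.176, 1)


def v3_floor(impact_weight: float) -> float:
    """CVSSv3.0 score of AV:N/AC:L/PR:N/UI:N/S:U with a single impact metric set."""
    impact = 6.42 * impact_weight
    exploitability = 8.22 * 0.85 * 0.77 * 0.85 * 0.85
    return roundup(min(impact + exploitability, 10))


v2_lowest = v2_floor(0.275)  # one metric at Partial, the lowest non-zero v2 level
v3_lowest = v3_floor(0.22)   # one metric at Low, the lowest non-zero v3 level
print(v2_lowest, v3_lowest)
```

Because the impact equations treat the three impact metrics symmetrically, it does not matter which of C, I, or A carries the single value; for such vectors the result is 5.0 in CVSSv2 and 5.3 in CVSSv3.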

The NVD website very accurately states that:

“Two common uses of CVSS are prioritization of vulnerability remediation activities and in calculating the severity of vulnerabilities discovered on one’s systems.”

Based on discussions with our customers and other people in the industry, we concur with NVD’s statement. However, the question must be asked: How useful is a risk scoring system really, if it overall cannot adequately deal with many classes of remote weaknesses without scoring them way too high?

As we see it, the only way to address this issue is to introduce an additional level in the impact metrics, e.g. “Minor (Mi)”, to be used for issues with very minor impacts bordering on security-irrelevant.

CVSSv3: Every vulnerability appears to be high priority

The first thing we did when starting this blog series was to reach out to the CVSS SIG mailing list to find out if there had been any detailed analysis of CVSSv2 vs CVSSv3 base scoring. Omar Santos had written a blog post called “The Evolution of Scoring Security Vulnerabilities.”

Here are some of the key points from that post:

- The study analyzed the difference between CVSSv2 and CVSSv3 scores using the scores provided by the National Vulnerability Database (NVD). A total of 745 vulnerabilities identified by CVEs and disclosed in 2016 were analyzed.

- The goal was to identify the percentage of vulnerabilities that had a score increase or decrease, based on the two versions of the protocol (CVSSv2 vs. CVSSv3).

- Score Increase from Medium to High or Critical

- 144 vulnerabilities increased from Medium to High or Critical. That represents 19.33% of all studied vulnerabilities and 38% of the 380 Medium-scaled vulnerabilities (under CVSSv2 scores). The average base score of these vulnerabilities was 6.1 with CVSSv2 with an increase to an average base score of 8.2 when scored with CVSSv3.

- Score Increase from Low to Medium

- 35 vulnerabilities increased from Low to Medium. That represents only 4.7% of all studied vulnerabilities, but 88% of the 40 Low-scaled vulnerabilities (under CVSSv2 scores). The average base score of these vulnerabilities was 3.0 with CVSSv2 with an increase to an average base score of 5.5 when scored with CVSSv3.
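The percentages in the key points above follow directly from the counts quoted from the post. A quick check, with variable names of our own choosing:

```python
# Counts quoted from the study summarized above.
total = 745
medium_v2, medium_raised = 380, 144
low_v2, low_raised = 40, 35


def pct(part: int, whole: int) -> float:
    """Share of `part` in `whole`, as a percentage."""
    return 100 * part / whole


print(f"Medium raised, share of all studied: {pct(medium_raised, total):.2f}%")
print(f"Medium raised, share of v2 Medium:   {pct(medium_raised, medium_v2):.1f}%")
print(f"Low raised, share of all studied:    {pct(low_raised, total):.1f}%")
print(f"Low raised, share of v2 Low:         {pct(low_raised, low_v2):.1f}%")
```

The results agree with the quoted 19.33%, 38%, 4.7%, and 88% once rounded.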

In the conclusion of the post, Omar Santos states: “The CVSS enhancements mean that we will see more vulnerabilities being rated as high or critical throughout the security industry.”

At the end of October 2016, Omar Santos published a follow-up post called “The Evolution of Scoring Security Vulnerabilities: The Sequel.”

Here are some of the key points from the post:

- The total number of vulnerabilities studied was 3,862. These were vulnerabilities disclosed from January 1, 2016 through October 6, 2016, and the source of the data is NVD.

- The average base score increased from 6.5 (CVSSv2) to 7.4 (CVSSv3).

- 44% of the vulnerabilities that scored Medium in CVSSv2 increased to High when scored with CVSSv3.

- 28% of the vulnerabilities that scored High in CVSSv2 increased to Critical when scored with CVSSv3.

- 1077 vulnerabilities moved from Low or Medium to High or Critical. That is a 52% increase in High or Critical vulnerabilities.

We were initially concerned that doing any comparison of CVSSv2 vs CVSSv3 by relying on NVD scores would be futile. The reason is that we have scored over 15,000 vulnerabilities in each of the past two years in our VulnDB product and have seen that NVD has scored a lot of issues incorrectly or inconsistently over those years with CVSSv2. We have noticed that they continue to score some vulnerabilities incorrectly using CVSSv3 as well.

Prior to reading Omar Santos’ articles, we were brainstorming our own ideas and trying to determine what we’d look at in the analysis when comparing CVSSv2 to CVSSv3 scoring provided by NVD.

- How many are the exact same score?

- What percentage of the vulnerabilities have the same Impact score?

- What percentage of the vulnerabilities have the same Exploitability score?

- What percentage of vulnerabilities stay in the same range (Low, Medium, High, Critical)?

- On average, how far apart are the scores?

- How many of the ratings (Low, Medium, High, Critical) are the exact same?
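Several of these questions translate fairly directly into a few lines of code. The following is a minimal sketch, assuming paired CVSSv2 and CVSSv3 base scores are already available per CVE. The function names and the sample pairs are placeholders for illustration only; they are not NVD data and not the tooling used for this analysis. The sketch covers exact matches, matching severity ranges, and the average shift; the sub-score questions would need the Impact and Exploitability values as additional inputs.

```python
from statistics import mean


def v2_band(score):
    """CVSSv2 severity range (v2 has no Critical range)."""
    if score >= 7.0:
        return "High"
    if score >= 4.0:
        return "Medium"
    return "Low"


def v3_band(score):
    """CVSSv3 qualitative severity rating."""
    if score >= 9.0:
        return "Critical"
    if score >= 7.0:
        return "High"
    if score >= 4.0:
        return "Medium"
    if score > 0.0:
        return "Low"
    return "None"


def compare(pairs):
    """Summarize a list of (cvss2_score, cvss3_score) pairs."""
    return {
        "same_score": sum(1 for v2, v3 in pairs if v2 == v3),
        "same_band": sum(1 for v2, v3 in pairs if v2_band(v2) == v3_band(v3)),
        "avg_shift": mean(v3 - v2 for v2, v3 in pairs),
    }


# Placeholder pairs, made up purely to exercise the functions.
sample_pairs = [(5.0, 5.3), (2.1, 5.5), (7.5, 9.8), (4.3, 4.3)]
print(compare(sample_pairs))
```

Comparing range names directly means a CVSSv2 High can never match a CVSSv3 Critical, which is one reason range-based comparisons between the two versions need to be read with care.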

We wanted to know if we could add any value to the scoring conversation and decided to take a look at scoring for all of 2016. In December 2015, NVD announced that they started scoring with CVSSv3. Prior to conducting any analysis, it was important to understand how NVD does their scoring.

When looking closer at the data, we can see that NVD started scoring CVSSv3 with CVE-2015-6934, which was published on December 20, 2015 at 10:59:00 PM. That initially led us to believe that we would have a full year of dual scoring for a 2016 analysis.

Once we started analysis, we saw something that caused us some immediate concern with the plan: For some unexplained reason, NVD did not fully score all vulnerabilities using both CVSSv2 and CVSSv3 from that point onward. 2016 started out with dual scoring and then suddenly there were gaps starting at CVE-2016-0401, which only provided CVSSv2 scoring for that particular vulnerability.

The good news was that it seemed to quickly get back under control with almost all CVEs having both CVSSv2 and CVSSv3 scores, so we continued on with our plan. In doing a full analysis for all of 2016 we saw that NVD scored the following:

- CVSSv2 – 5,135 vulnerabilities

- CVSSv3 – 4,929 vulnerabilities

While not every vulnerability published by NVD in 2016 had both scores, it was determined that just 209 vulnerabilities were missing CVSSv3 scoring. We were pleased to discover this, as we felt it gave us a decent sampling of both scores for further analysis of a few points.

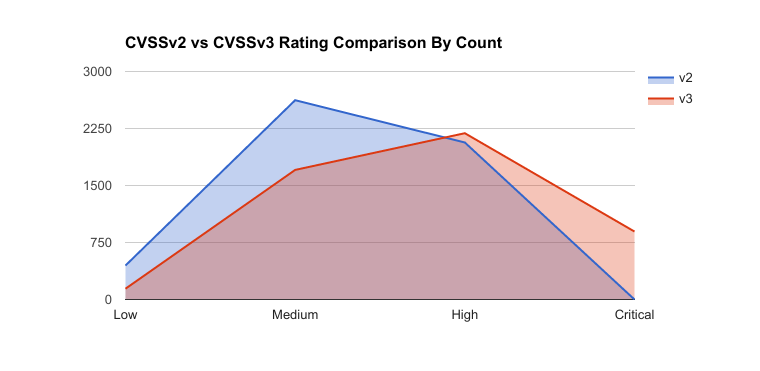

The following is a distribution of all CVSSv2 and CVSSv3 scores from 2016:

The chart immediately aligned with what we expected to see based on our analysis of the new standard. It confirmed that with a larger sample size Omar Santos’ findings are still true. The changes to CVSSv3 have increased the overall base scoring of vulnerabilities based on the numbers from 2016.

| Severity | CVSSv2 | CVSSv3 |
| --- | --- | --- |
| Low | 447 | 142 |
| Medium | 2,622 | 1,705 |
| High | 2,066 | 2,188 |
| Critical | – | 894 |

Here we can see the percentage breakout:

| Severity | CVSSv2 | CVSSv3 |
| --- | --- | --- |
| Low | 8.70% | 2.88% |
| Medium | 51.06% | 34.59% |
| High | 40.23% | 44.39% |
| Critical | – | 18.14% |

What did we see from the 2016 analysis?

- Low severity vulnerabilities decreased by 5.82 percentage points (only 142 vulnerabilities!) when scored with CVSSv3

- Medium severity vulnerabilities decreased by 16.47 percentage points when scored with CVSSv3

- High severity vulnerabilities increased by 4.16 percentage points when scored with CVSSv3

- Critical severity vulnerabilities increased by 18.14 percentage points when scored with CVSSv3 (since Critical didn’t exist in CVSSv2, it had to increase! =)
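The shares and shifts above can be reproduced from the raw counts. This is a small sketch using the 2016 counts from the tables; the variable and function names are our own, and the shifts are differences between shares, i.e. percentage points rather than relative changes.

```python
# 2016 NVD counts per severity range, taken from the table above.
V2_COUNTS = {"Low": 447, "Medium": 2622, "High": 2066, "Critical": 0}
V3_COUNTS = {"Low": 142, "Medium": 1705, "High": 2188, "Critical": 894}


def shares(counts):
    """Share of each severity range as a percentage of all scored entries."""
    total = sum(counts.values())
    return {band: 100.0 * n / total for band, n in counts.items()}


def point_shift(before, after):
    """Difference between two share tables, in percentage points."""
    return {band: after[band] - before[band] for band in before}


v2_share = shares(V2_COUNTS)
v3_share = shares(V3_COUNTS)
shift = point_shift(v2_share, v3_share)

for band in V2_COUNTS:
    print(f"{band:<8} {v2_share[band]:6.2f}% -> {v3_share[band]:6.2f}% "
          f"({shift[band]:+.2f} points)")
```

Note that the two columns use different denominators (5,135 CVSSv2 scores versus 4,929 CVSSv3 scores), so the comparison is between distributions rather than between identical sets of vulnerabilities.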

The initial reactions that some may have are:

- What is the importance that scores have increased?

- Isn’t it a great thing that the base scores have increased?

- Doesn’t this make sure that vulnerabilities are fixed quickly?

Kymberlee Price discussed prioritization and why it matters in a 2015 Black Hat talk titled “Stranger Danger! What Is The Risk From 3rd Party Libraries?” One of the main points she discussed applies directly to the increased base score ratings.

In the 2016 analysis, using CVSSv3 we see that High and Critical severity vulnerabilities account for 3,082 vulnerabilities (62.53 percent). We also note that almost no vulnerabilities are scored as Low severity (only 2.88 percent).

CVSSv3 scoring impacts

In August 2007, the Payment Card Industry Data Security Standard required the use of “the NVD Common Vulnerability Scoring System impact scores for use within approved scanning vendor tools.” The document states the following about CVSS:

“Generally, to be considered compliant, a component must not contain any vulnerability that has been assigned a CVSS base score equal to or higher than 4.0.”

So we have to consider that PCI compliance generally dictates a failure if any vulnerabilities with a CVSS score of 4.0 or above are found. Based on that requirement and using CVSSv3, in order to be PCI compliant, an organization would have to address more than 97 percent of the vulnerabilities reported in 2016.
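The 97 percent figure follows from the severity counts alone. The sketch below assumes that every entry in the Low range scored below 4.0 and that everything else scored 4.0 or higher, which matches the range definitions; the names used are our own.

```python
# 2016 NVD counts per severity range, from the tables earlier in this post.
V2_COUNTS = {"Low": 447, "Medium": 2622, "High": 2066}
V3_COUNTS = {"Low": 142, "Medium": 1705, "High": 2188, "Critical": 894}

PCI_FAIL_THRESHOLD = 4.0  # base score at or above which PCI generally fails a component


def fails_pci(base_score):
    """True if a single base score is at or above the PCI threshold."""
    return base_score >= PCI_FAIL_THRESHOLD


def share_failing(counts):
    """Percentage of entries outside the Low range, i.e. scored 4.0 or higher."""
    total = sum(counts.values())
    return 100.0 * (total - counts["Low"]) / total


print(f"CVSSv2: {share_failing(V2_COUNTS):.2f}% at or above 4.0")
print(f"CVSSv3: {share_failing(V3_COUNTS):.2f}% at or above 4.0")
```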

While security is important, and most organizations appear to be focused on fixing issues more than ever before, the reality is that there is only so much time to invest into security patching. System administrators are asking security teams to prove that the issues they raise really need to be dealt with immediately rather than being addressed during routine maintenance windows.

The analysis also underlines a problem discussed previously. A vulnerability with a remote attack vector that is considered Low severity in the real world will rarely also be rated Low in CVSSv3.

In fact, this was already a problem with CVSSv2. While a minor local vulnerability may fall into the Low severity range, almost no minor vulnerabilities with a remote vector do. CVSSv3 has now made this serious failing of the CVSS scoring system even worse.

Consider a basic vulnerability that allows disclosing the version of an installed product. These are borderline vulnerabilities; many security practitioners do not consider them vulnerabilities, but the industry has generally decided that disclosing such information is bad security practice and should be considered a minor security weakness. Most would agree that such an issue should score as Low severity, but these are the CVSSv2 and CVSSv3 scores for such weaknesses:

CVSSv2: AV:N/Au:N/AC:L/C:P/I:N/A:N = 5.0 (Medium)

CVSSv3: AV:N/AC:L/PR:N/UI:N/S:U/C:L/I:N/A:N = 5.3 (Medium)
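To show where the CVSSv2 score of 5.0 comes from, here is a minimal sketch of the CVSSv2 base equation. The metric weights and the equation are taken from the CVSSv2 specification; the function name and the simple vector parsing are our own and do no input validation.

```python
# CVSSv2 base metric weights.
AV = {"L": 0.395, "A": 0.646, "N": 1.0}    # Access Vector
AC = {"H": 0.35, "M": 0.61, "L": 0.71}     # Access Complexity
AU = {"M": 0.45, "S": 0.56, "N": 0.704}    # Authentication
CIA = {"N": 0.0, "P": 0.275, "C": 0.660}   # Confidentiality / Integrity / Availability


def cvss2_base(vector):
    """Compute the CVSSv2 base score from a vector such as 'AV:N/AC:L/Au:N/C:P/I:N/A:N'."""
    m = dict(part.split(":") for part in vector.split("/"))
    impact = 10.41 * (1 - (1 - CIA[m["C"]]) * (1 - CIA[m["I"]]) * (1 - CIA[m["A"]]))
    exploitability = 20 * AV[m["AV"]] * AC[m["AC"]] * AU[m["Au"]]
    f_impact = 0.0 if impact == 0 else 1.176
    return round((0.6 * impact + 0.4 * exploitability - 1.5) * f_impact, 1)


print(cvss2_base("AV:N/Au:N/AC:L/C:P/I:N/A:N"))  # 5.0
```

With a network vector, low complexity, and no authentication, the exploitability sub-score is close to its maximum of 10, so even a single ‘Partial’ impact is enough to push the result into the Medium range.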

There are many similar weaknesses with remote attack vectors that also end up in the Medium severity range instead of Low. But as mentioned, very few weaknesses with remote attack vectors are able to score as Low severity.

It can be argued that it is a huge problem when a standard that is entirely focused on helping prioritize vulnerabilities, with levels ranging from Low through Medium and High to Critical, will rarely score a vulnerability as Low in real-world cases! It could even be further argued that the standard is broken, as it does not truly help organizations understand and prioritize the most critical vulnerabilities that are disclosed.

CVSSv3: Is it all bad?

To be fair, it is important to know that CVSSv3 is not all bad news. In this blog post, we are, therefore, going to discuss a few aspects of CVSSv3 that we do like and consider great improvements over CVSSv2.

Goodbye ‘Complete’ impact score, hello ‘High’

A big problem with CVSSv2 is how its impact metrics – Confidentiality (C), Integrity (I), and Availability (A) – have the three possible values named:

- None (N)

- Partial (P)

- Complete (C)

These values reflect the impact on the system itself. Due to their naming, a vulnerability can only be considered as having a ‘Complete (C)’ impact for any of the three impact metrics if the impact is system-wide. Similarly, ‘None (N)’ means no impact at all. This results in ‘Partial (P)’ ultimately covering a very wide range from “almost no impact” to “almost a complete impact”. In reality, using this structure results in too many vulnerabilities scoring ‘Partial (P)’ regardless of whether their impacts are quite severe or insignificant. Basically, very little granularity is provided.

While CVSSv3 still struggles when dealing with remote vulnerabilities with insignificant impacts, as detailed previously, CVSSv3 has, fortunately, addressed the challenge with high risk issues.

“Rather than representing the overall percentage (proportion) of the systems impacted by an attack, the new metric values reflect the overall degree of impact caused by an attack.”

CVSSv3 now provides impact metric values of:

- None (N)

- Low (L)

- High (H)

A score of ‘High (H)’ now not only covers an impact that is considered “complete”, but anything with a significant impact. As an example, consider the following scores for a vulnerability that allows a local user to disclose an administrative user’s password on the system.

In CVSSv2, the correct score for ‘Confidentiality (C)’ would be ‘Partial (P)’, as the immediate impact of the vulnerability does not allow disclosing all information on the system, but rather just the password. In CVSSv3, the impact to ‘Confidentiality (C)’ is now ‘High (H)’ and much better reflects the real-world potential impact.

CVSSv2 = AV:L/AC:L/Au:N/C:P/I:N/A:N = 2.1 (Low)

CVSSv3 = AV:L/AC:L/PR:L/UI:N/S:U/C:H/I:N/A:N = 5.5 (Medium)

Actually, the CVSSv3 score above is not quite right, because this example nicely leads us to another improvement to the handling of impacts…

Scoring of immediate follow-up impacts

In the example given above, the impact metrics as mentioned in CVSSv2 would be: C:P/I:N/A:N i.e. partial impact to the confidentiality, but otherwise no other impacts. The reason for this is that CVSSv2 specifies that only the immediate impact should be scored, which in this case is the disclosure of the administrative user’s password.

Does that properly reflect the real threat?

No, it does not. If a local user can disclose the administrative user’s password, that local user can immediately use that exact password to elevate privileges to administrator on the system. This is not captured by CVSSv2.

The great news is that CVSSv3 does capture it properly!

According to the CVSSv3 guidelines, not only the immediate impact, but anything that can be “predictably associated with a successful attack” – without it being too far-fetched – should also be reflected by the impact metrics.

“That is, analysts should constrain impacts to a reasonable, final outcome which they are confident an attacker is able to achieve.”

In our example, the impact metrics are, therefore, scored as: C:H/I:H/A:H, making the full CVSSv3 vector string and score the following:

CVSSv3 = AV:L/AC:L/PR:L/UI:N/S:U/C:H/I:H/A:H = 7.8 (High)
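The CVSSv3 scores quoted in this post can be reproduced with a short sketch of the CVSSv3 base equation. The weights and formulas follow the CVSSv3 specification. The `roundup` helper uses the integer-based variant later described for CVSS v3.1 to avoid floating-point artifacts, which is our choice here, and the function names and vector parsing are our own with no input validation.

```python
import math

# CVSSv3 base metric weights.
AV = {"N": 0.85, "A": 0.62, "L": 0.55, "P": 0.2}
AC = {"L": 0.77, "H": 0.44}
UI = {"N": 0.85, "R": 0.62}
PR_UNCHANGED = {"N": 0.85, "L": 0.62, "H": 0.27}  # Scope Unchanged
PR_CHANGED = {"N": 0.85, "L": 0.68, "H": 0.5}     # Scope Changed
CIA = {"H": 0.56, "L": 0.22, "N": 0.0}


def roundup(value):
    """Round up to one decimal place using integer arithmetic."""
    scaled = round(value * 100000)
    if scaled % 10000 == 0:
        return scaled / 100000.0
    return (math.floor(scaled / 10000) + 1) / 10.0


def cvss3_base(vector):
    """Compute the CVSSv3 base score from a vector without the 'CVSS:3.0/' prefix."""
    m = dict(part.split(":") for part in vector.split("/"))
    changed = m["S"] == "C"
    iss = 1 - (1 - CIA[m["C"]]) * (1 - CIA[m["I"]]) * (1 - CIA[m["A"]])
    if changed:
        impact = 7.52 * (iss - 0.029) - 3.25 * (iss - 0.02) ** 15
    else:
        impact = 6.42 * iss
    pr = (PR_CHANGED if changed else PR_UNCHANGED)[m["PR"]]
    exploitability = 8.22 * AV[m["AV"]] * AC[m["AC"]] * pr * UI[m["UI"]]
    if impact <= 0:
        return 0.0
    total = impact + exploitability
    if changed:
        total *= 1.08
    return roundup(min(total, 10.0))


print(cvss3_base("AV:L/AC:L/PR:L/UI:N/S:U/C:H/I:N/A:N"))  # 5.5
print(cvss3_base("AV:L/AC:L/PR:L/UI:N/S:U/C:H/I:H/A:H"))  # 7.8
```

The only difference between the two vectors is the Integrity and Availability impact, which shows how much of the jump from 5.5 to 7.8 comes from scoring the follow-up impact.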

While one could argue that 7.8 seems quite high for a local privilege escalation attack when comparing to some scores for remote issues, it does certainly better capture the real-world impact to the system than the CVSSv2 score of 2.1. It does not downplay the severity with the potential result of causing organizations relying on the CVSS scores to improperly prioritize the issue.

User Interaction (UI) and Privileges Required (PR)

CVSSv3 also introduces a few new metrics. Two of these worth highlighting are ‘User Interaction (UI)’ and ‘Privileges Required (PR)’. Both have added much needed granularity when scoring vulnerabilities and also make the vector strings more useful when looking for a quick overview of a vulnerability.

The ‘User Interaction (UI)’ metric is pretty self-explanatory and specifies whether or not exploitation of a given vulnerability relies on user interaction (think of a user clicking a link!). If it does, the severity of the issue is slightly reduced, which makes sense.

The ‘Privileges Required (PR)’ metric is used to specify the permission level required for the attacker in order to exploit the vulnerability. The value can be either ‘None (N)’, ‘Low (L)’, or ‘High (H)’. The higher the permissions required to exploit a given vulnerability, the lower the severity. We believe that this nicely reflects the real-world view of a vulnerability’s true potential severity.
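To illustrate how these two metrics pull the score down, the following sketch computes only the CVSSv3 exploitability sub-score for a network attack with low complexity while varying PR and UI. The weights are those from the CVSSv3 specification for an unchanged Scope; the function name is our own.

```python
# CVSSv3 exploitability weights (PR values shown are for Scope Unchanged).
AV = {"N": 0.85, "A": 0.62, "L": 0.55, "P": 0.2}
AC = {"L": 0.77, "H": 0.44}
PR = {"N": 0.85, "L": 0.62, "H": 0.27}
UI = {"N": 0.85, "R": 0.62}


def exploitability(av, ac, pr, ui):
    """CVSSv3 exploitability sub-score before it is combined with the impact."""
    return 8.22 * AV[av] * AC[ac] * PR[pr] * UI[ui]


# Vary PR and UI for an otherwise identical AV:N/AC:L vulnerability.
for pr in "NLH":
    for ui in "NR":
        value = exploitability("N", "L", pr, ui)
        print(f"AV:N/AC:L/PR:{pr}/UI:{ui} -> exploitability {value:.2f}")
```

Requiring user interaction and requiring low privileges happen to carry the same weight (0.62), so either one reduces the exploitability sub-score by the same amount.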

We welcome both of these new metrics in CVSSv3 and believe they are an improvement even if other bad decisions were made elsewhere to reduce granularity.

Scope

We already discussed the newly introduced ‘Scope (S)’ metric in a previous blog post at length. As we mentioned in that blog post, the ‘Scope (S)’ metric introduces some problems related to how the guidelines specify its use, but it actually does also provide benefits over CVSSv2 when it comes to scoring certain types of vulnerabilities related to sandboxes and virtualized environments.

Scoring vulnerabilities with CVSSv2 is not really useful when dealing with sandboxes and virtualized environments. Analysts are forced to either ignore such limitations or try to work somewhat around them with pretty special scoring approaches. By adding the ‘Scope (S)’ metric, CVSSv3 now makes it possible to better reflect when a change in authorization scope occurs and score such cases properly. Even though there are still some minor problems when using it to score sandbox issues, it works quite nicely for virtualized environments, which was also the main focus point when this new metric was introduced.

Is CVSSv3 the magic number?

It is clear to us that the attention received underlines that even though CVSSv3 has existed since 2015, many security practitioners are still not sure about the standard and are – as expected – very slow to adopt it. It also underlines that this is a topic of interest to many, who want useful vulnerability prioritization tools. This is why it is so important that the CVSS SIG should listen to what the industry wants and needs.

Here are some of the key points summarized from the feedback we’ve received on this topic:

- It seems to be a 50/50 split between people wanting file-based attack vectors to be treated as ‘Local’ (CVSSv3 approach) vs ‘Remote’ (CVSSv2 approach). However, everyone agreed that the special exceptions for whether or not a file is opened in a browser or browser plugin vs 3rd party application has created a mess.

- Almost everyone acknowledges that CVSSv3 still has significant flaws and is not reliable. However, the majority still believes that CVSSv3 is more accurate than CVSSv2.

- There was strong agreement that consideration of exploit reliability and complexity, i.e. the requirement to overcome advanced exploit mitigation techniques and similar, should not be a criterion for evaluating “Attack Complexity (AC)” or any other base metric. In fact, not only does the CVSS SIG disregard this criterion themselves in their provided scoring examples, it is also disregarded by pretty much all vendors scoring CVSSv3, seemingly with the exception of Microsoft. Instead, CVSSv3 users want “Attack Complexity (AC)” to only reflect the difficulty in mounting an attack, e.g. considering Man-in-the-Middle (MitM) vectors and similar.

- We were asked our thoughts on the temporal and environmental scoring of CVSS. While the scope of our blog series was only the base scoring, the general thoughts shared with us were that temporal scores are broken based on how they impact base scores, but that environmental scoring could be used to offset the limitations of CVSSv3. For example, the Environmental Score Modified Attack Vector (MAV) metric would allow organizations to choose to compensate for the issues regarding file-based attacks. We believe that while using environmental metrics could indeed help to address the shortcomings, that is not the intended use of the new modified base metrics.

One thing that really stood out from the feedback is that many people do not find CVSSv3 to be reliable. Consider that CVSSv3 is specifically intended as a standard to assist in prioritizing vulnerability management, so it is failing to deliver on a core goal. Yet it was also interesting to hear some people state that they find the resulting scores more accurate.

Unfortunately, while our analysis does support more accurate scores for certain types of vulnerabilities, many scores are still not remotely reflecting real-world impact. While it won’t be possible to get it perfect, too many severe vulnerabilities related to file-based attacks are downplayed, while many other vulnerability types have inflated scores. This ultimately makes CVSSv3 less useful as a prioritization tool.

Our conclusion of CVSSv3

When we initially learned about some of the changes to CVSSv3, we were very hopeful. While we do acknowledge and see improvements over CVSSv2, the newly introduced problems – in part due to guideline changes – ultimately do not make it much better than what it supersedes. At least it is not what we had hoped for and what we believe the industry needs.

CVSSv3 in its current form just has too many problems outstanding. We consider it still somewhat in the preview stage and hope that the feedback we have provided to the CVSS SIG is considered and implemented in a later revision.

As it stands, we find it hard for most organizations to justify investing time and effort converting to CVSSv3 over CVSSv2. We also believe that with the base scores increasing, it has the potential to backfire as everything may seem to be high priority. The good news is that we still believe, as mentioned several times during this blog series, that it does have promise with some changes to the guidelines and tweaks to the scoring.

While we are not pleased with the current state of CVSSv3, we continue to support the development and hope to influence positive changes to the standard. In our VulnDB product we have implemented our initial phase to support CVSSv3 and have further plans to increase support for CVSSv3 scoring. We do this in part because we hope for future improvements that will make CVSSv3 more useful, but also because some customers are starting to ask for it, often simply because it’s a newer version of the standard and not always because it’s perceived as better.

While we want to follow the standard as closely as possible, we are still working on our implementation to determine if we are to follow the standard to the letter or ignore/tweak a few of the guidelines to provide more accurate scoring, making it a more reliable prioritization tool. Similarly, we may ignore certain scoring examples given in the “Common Vulnerability Scoring System v3.0: Examples” document, as they are clearly based on wrong premises as discussed in previous blog posts in this CVSSv3 series.

As a closing statement, we would like to highlight the goal of the FIRST CVSS SIG when designing CVSSv3: “CVSS version 3 sets out to provide a robust and useful scoring system for IT vulnerabilities that is fit for the future”.

Has CVSSv3 achieved this goal?

After performing an objective review of the standard, our response is very clear: “No, unfortunately not. Not only will it not be fit for the future as technology continues to change, it is not really fit for organizations’ needs today.”